When the AI Governance Framework Didn't Catch the Violation

The framework didn’t catch the violation. A human did. Me.

Nino Chavez

Product Architect at commerce.com

Is what I’m doing all smoke and mirrors?

Sometimes it feels that way. Even with a working governance framework for AI-generated code—and even when we’re scoping things into reasonable units of work—stuff still slips through. It shouldn’t. But it does.

This time, the framework didn’t catch the violation. A human did. Me.

We dropped the framework into an existing project and got rolling. But I started noticing red flags. I called them out directly in the system, and the agent responded not defensively but constitutionally: it checked its own change against the framework’s rules.

What the Agent Identified

Blueprint primacy violation: a “bypass” solution was implemented without a blueprint or a corresponding annotation. Rule violated: “No code shall be generated without a corresponding blueprint specification.”

Traceability violation: a band-aid fix was introduced without a blueprint trace, the reasoning for the change was not documented in framework terms, and the constitutional change classification was skipped.

Safety principle violation: the change had no fallback mechanism or error handling, and the bypass was dangerous because it masked future auth issues. That breached the system’s safety requirements.

Copilot instructions violation: the instructions say, “You are a compiler for intent—not a guesser.” The agent guessed at a bypass fix instead of addressing root intent, introduced unvalidated logic, and violated the directory structure and operational discipline rules.
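To make the first two violations concrete, here is a minimal sketch of what an automated blueprint check could look like. It is not how the Aegis Framework actually detects anything. The `@aegis-blueprint:` annotation format, the rule names, and the `check_blueprint_primacy` function are all hypothetical, invented for illustration.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Set

# Hypothetical annotation format (an assumption, not the framework's real syntax):
#   # @aegis-blueprint: <blueprint-id>
ANNOTATION = re.compile(r"@aegis-blueprint:\s*([A-Za-z0-9_.-]+)")


@dataclass
class Violation:
    path: str
    rule: str
    detail: str


def check_blueprint_primacy(
    changed_files: Dict[str, str], known_blueprints: Set[str]
) -> List[Violation]:
    """Flag changed files that carry no blueprint annotation, or one that
    points at a blueprint ID nobody has defined."""
    violations: List[Violation] = []
    for path, source in sorted(changed_files.items()):
        match = ANNOTATION.search(source)
        if match is None:
            # No annotation at all: code exists without a blueprint.
            violations.append(
                Violation(path, "blueprint-primacy", "no blueprint annotation")
            )
        elif match.group(1) not in known_blueprints:
            # Annotation present, but it cannot be traced to a real blueprint.
            violations.append(
                Violation(path, "traceability", f"unknown blueprint '{match.group(1)}'")
            )
    return violations
```

A check like this only proves that an annotation exists and resolves to a known ID. It says nothing about whether the code matches the blueprint’s intent, which is the part a human still has to judge.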

What Should Have Happened

Create a diagnostic blueprint for environment validation. Classify the issue correctly as infra versus code. Run config validation tools first. Provide a traceable, documented fix aligned with framework principles.
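The “validate config first, then classify” step can be sketched in a few lines. This is an illustration under stated assumptions, not the framework’s tooling: the function names, the returned fields, and the setting names in the usage note are all hypothetical.

```python
from typing import Dict, List, Mapping, Sequence


def validate_config(env: Mapping[str, str], required: Sequence[str]) -> List[str]:
    """Return the names of required settings that are missing or blank."""
    return [name for name in required if not env.get(name, "").strip()]


def classify_issue(env: Mapping[str, str], required: Sequence[str]) -> Dict[str, object]:
    """Run config validation before touching code, then classify the issue.

    If the environment is incomplete, treat the problem as infra and stop
    there instead of patching around it in code.
    """
    missing = validate_config(env, required)
    if missing:
        return {
            "classification": "infra",
            "missing": missing,
            "next_step": "fix the environment config; do not patch code",
        }
    return {
        "classification": "code",
        "missing": [],
        "next_step": "write a blueprint for the code fix",
    }
```

In practice you might call `classify_issue(os.environ, ["AUTH_ISSUER_URL", "AUTH_CLIENT_ID"])`, where both setting names are made up for this example. The point of the ordering is that a bypass never gets written for a problem that was really a missing environment value.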

The Uncomfortable Truth

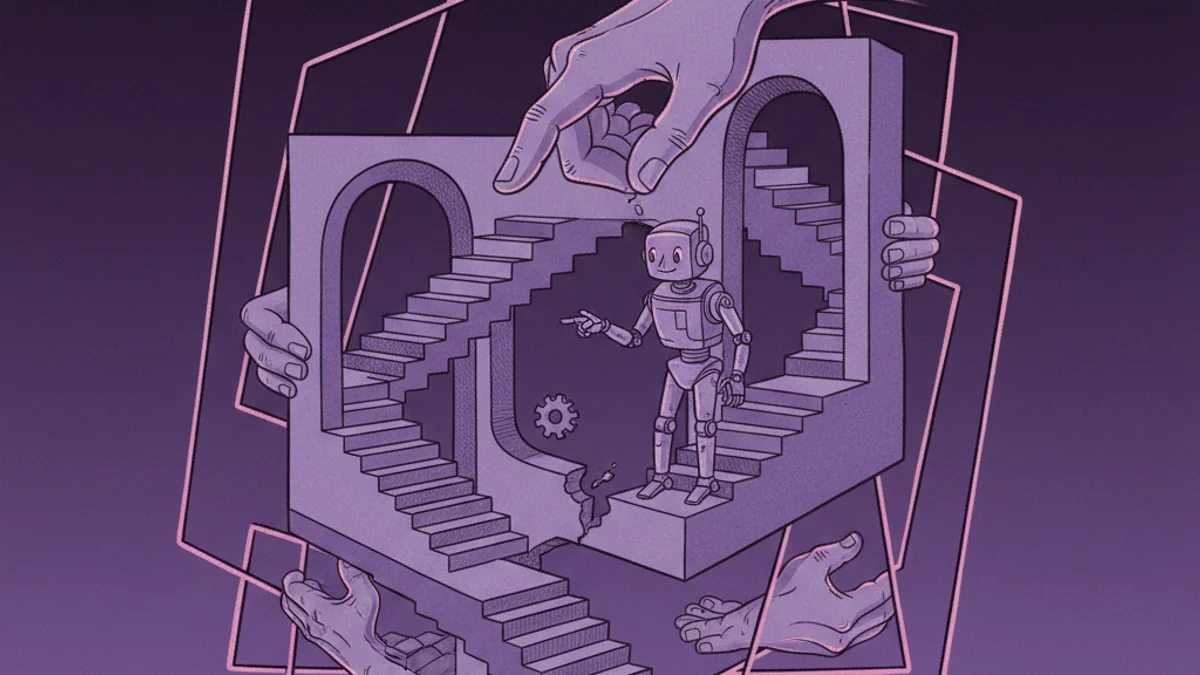

This is why I built the Aegis Framework. Not for governance theater—but to detect drift, prevent technical debt, and enforce intent-driven development.

Calling this out was uncomfortable, but necessary. The framework should have flagged it. Until it does—I have to. And sometimes that feels like a losing battle.

I’m still not sure whether the framework needs better detection logic, or whether some violations are just inherently hard to catch automatically. Probably both. The gap between what the framework promises and what it actually prevents is the space where I’m still doing manual work.

Originally Published on LinkedIn

This article was first published on my LinkedIn profile.